Abstract

We present CloudEval-YAML, a practical benchmark for cloud configuration generation. CloudEval-YAML tackles the diversity challenge by focusing on YAML, the de facto standard of numerous cloud-native tools. We develop the CloudEval-YAML benchmark with practicality in mind: the dataset consists of hand-written problems with unit tests targeting practical scenarios. We further enhanced the dataset to meet practical needs by rephrasing questions in a concise, abbreviated, and bilingual manner. The dataset consists of 1011 problems that take more than 1200 human hours to complete. To improve practicality during evaluation, we build a scalable evaluation platform for CloudEval-YAML that achieves a 20 times speedup over a single machine. To the best of our knowledge, the CloudEval-YAML dataset is the first hand-written dataset targeting cloud-native applications. We present an in-depth evaluation of 12 LLMs, leading to a deeper understanding of the problems and LLMs, as well as effective methods to improve task performance and reduce cost.

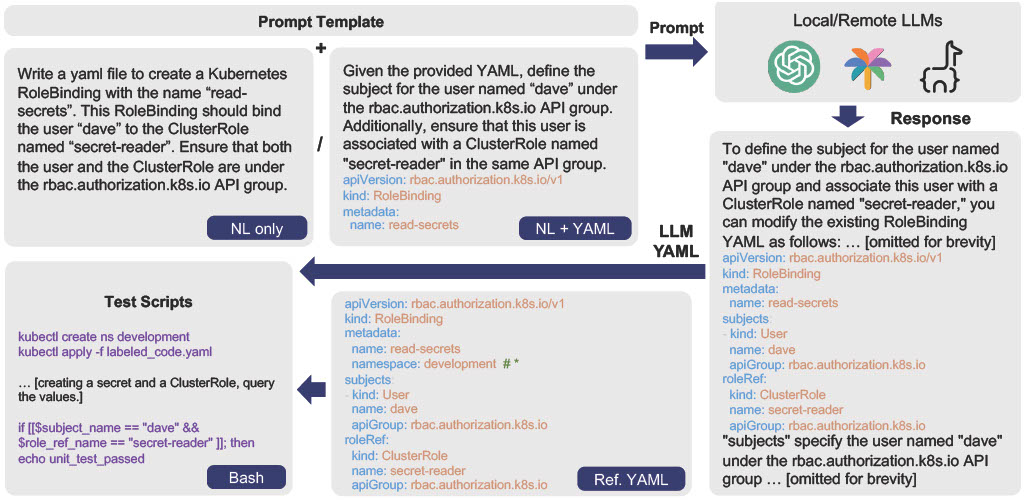

Dataset

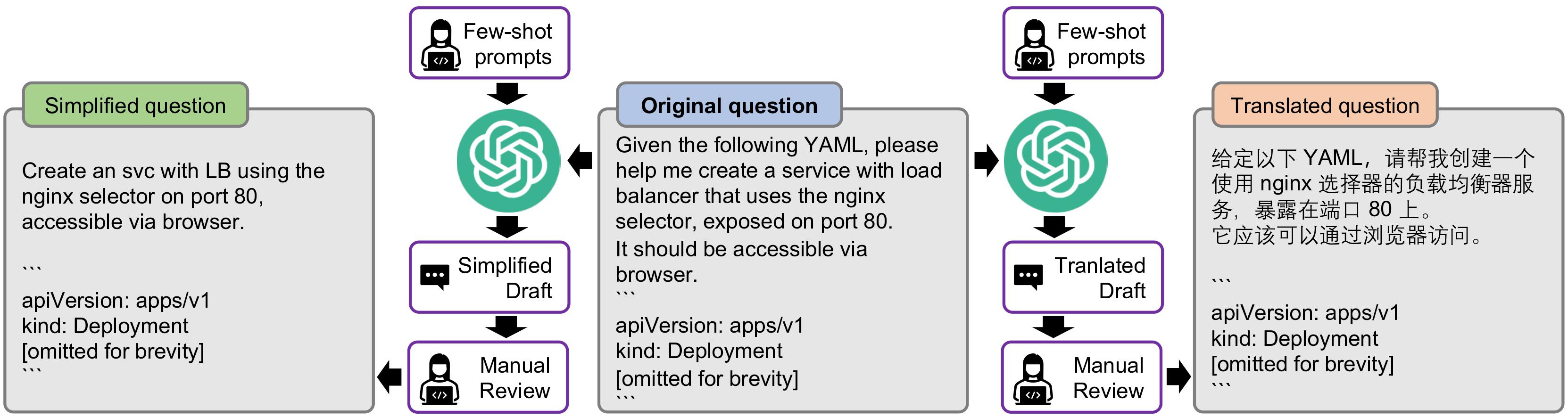

Data Augmentation

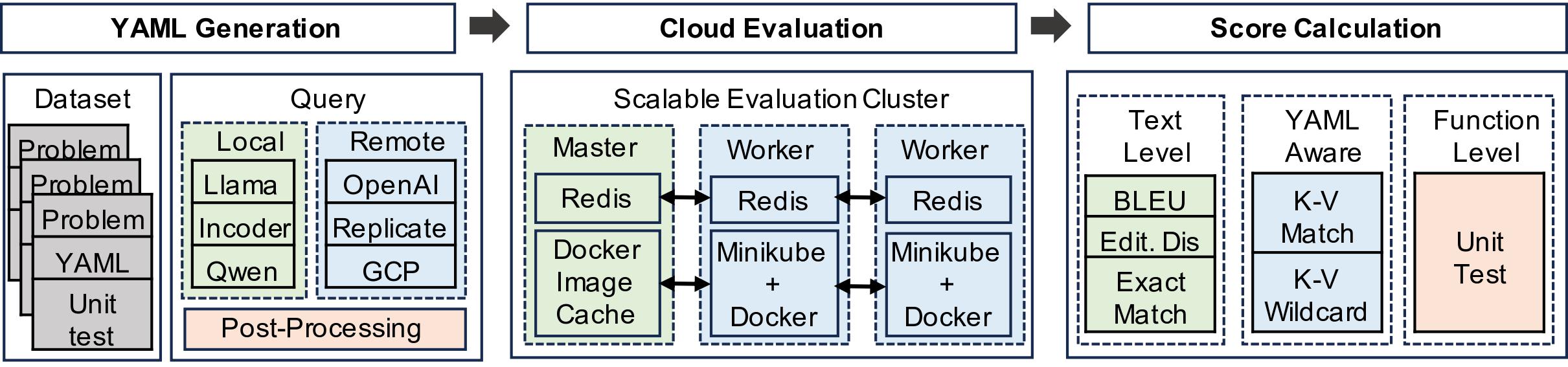

Evaluation Platform

Leaderboard